Author: Dreadatour Source: http://habrahabr.ru/post/143102/

The company where I spend all the time I am not resting has very strict security requirements. Tokens are used for user authentication wherever possible. So they gave me this thing:

and told me: press the button, read the digits, enter the password and be happy. “Of course, security comes first, but there should still be some room for comfort,” I thought, and went through all the electronic junk I had.

(To be honest, I had long wanted to take a break from programming and tinker with hardware, so laziness was not my only motivation here.)

Scope of work analysis and components selection

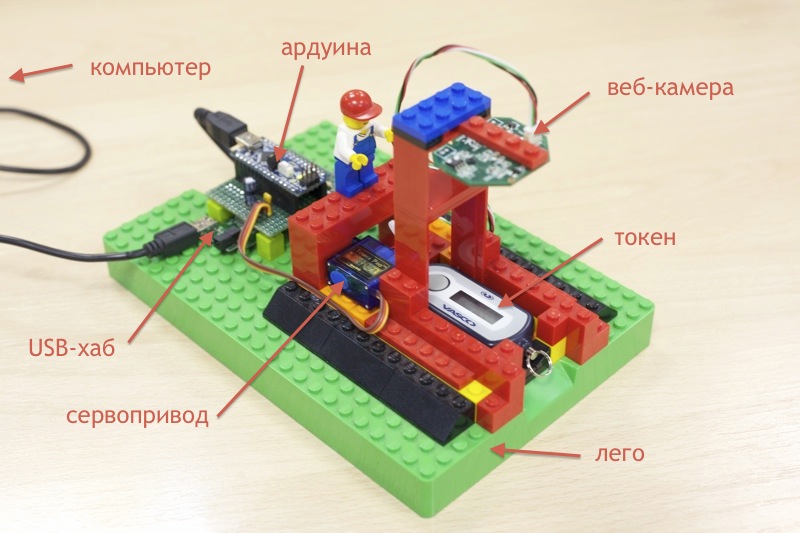

First of all, I found an old Logitech QuickCam 3000 webcam, which had been “retired” after I bought a laptop with a built-in webcam. The plan came together in an instant: capture the token digits with the webcam, recognize them on the computer… Profit! Then I found a servo (too lazy to press the token button by hand every time, right?), an old USB hub (my laptop has only two USB ports, and one of them is always occupied by a USB-Ethernet adapter) and an Arduino board (God knows how it ended up with me). For the housing I used a LEGO construction set bought in advance for my one-year-old kid (now I think I understand my wife’s ironic smile at the moment of purchase).

Unfortunately, I have no separate photos of the donor devices, so I can only show you the assembled device:

(Clockwise: computer, Arduino, webcam, token, LEGO, servo, USB hub)

Circuit diagram and microcontroller software

Actually, it is extremely simple (I am not even going to draw a diagram): a USB hub with the webcam and the Arduino connected to it. The servo is connected to the Arduino and driven by PWM. That’s it. The Arduino source code is also trivial: github.com/dreadatour/lazy/blob/master/servo.ino. The Arduino waits for the ‘G’ character on the COM port and, on receiving it, moves the servo back and forth. The delay (500 ms) and the servo angle were determined experimentally.
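On the computer side, triggering the servo then comes down to writing a single byte. The sketch below is only an illustration of that protocol, not the author's code: `press_button` and `TRIGGER` are hypothetical names, and the port is assumed to be any object with `write()` and `flush()`. With the pyserial library this would be a `serial.Serial` instance; here an in-memory buffer can stand in for it.

```python
import io

# The byte the Arduino sketch waits for, according to the article.
TRIGGER = b"G"


def press_button(port):
    """Ask the Arduino to swing the servo by sending the trigger byte.

    `port` is any object with write() and flush(). With pyserial it would be
    a serial.Serial instance (an assumption; the article does not show this).
    """
    port.write(TRIGGER)
    port.flush()


# Usage with an in-memory stand-in for the serial port:
fake_port = io.BytesIO()
press_button(fake_port)
```

Keeping the port as a parameter makes it easy to try the logic without the hardware attached.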

Selection of programming language and analysis of existing “computer vision” libraries

The only programming language that can be trusted with such a complicated challenge as “computer vision” is the blessed Python, since it has almost out-of-the-box bindings to such a great library as OpenCV. Actually, let’s stop here.

Token code recognition algorithm

I will give links to the parts of the source code responsible for the functionality being described; I believe this is the best way to present the information. All the source code is available on GitHub.

First of all, we capture an image from the webcam:

Let’s make things easier: the webcam is fixed relative to the housing and the token can move only very slightly, so we can find the limits of the token’s possible positions, crop the webcam picture and (just for our own convenience) rotate the image by 90°:
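As a rough illustration of the crop-and-rotate step, here is a minimal sketch on a plain list-of-rows image. The function names, the window coordinates and the clockwise direction are assumptions for the example; the article does not say which way the image is turned. With the NumPy arrays that OpenCV returns, the crop would simply be an array slice.

```python
def crop(image, top, left, height, width):
    """Return the height x width window whose top-left corner is (top, left)."""
    return [row[left:left + width] for row in image[top:top + height]]


def rotate_90_clockwise(image):
    """Rotate a row-major image by 90 degrees clockwise."""
    # Reversing the rows and then transposing turns the left column
    # into the top row, which is a clockwise quarter turn.
    return [list(column) for column in zip(*image[::-1])]


# Usage on a tiny 3x3 "frame":
frame = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
window = crop(frame, top=1, left=1, height=2, width=2)
turned = rotate_90_clockwise(window)
```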

After that we make some conversions: convert the resulting image to grayscale and find the edges in it with the Canny edge detector. These will be the borders of the token’s LCD screen:

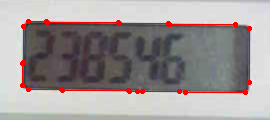

Next we find contours in the resulting image. A contour is made up of an array of lines; we remove the lines that are shorter than a defined length:
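The length filter can be sketched as follows, assuming each line is stored as a pair of `(x, y)` end points. The representation, the function names and the threshold value are illustrative assumptions, not taken from the author's code.

```python
import math


def segment_length(segment):
    """Euclidean length of a segment given as ((x1, y1), (x2, y2))."""
    (x1, y1), (x2, y2) = segment
    return math.hypot(x2 - x1, y2 - y1)


def drop_short_segments(segments, min_length):
    """Keep only the segments that are at least min_length pixels long."""
    return [s for s in segments if segment_length(s) >= min_length]


# Usage: the short diagonal stub is discarded, the two long lines survive.
segments = [((0, 0), (3, 4)), ((0, 0), (1, 1)), ((0, 0), (0, 50))]
long_enough = drop_short_segments(segments, min_length=5)
```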

Then we use a straightforward algorithm to pick out the four lines of our LCD screen:

We find the intersection points of these lines and make several checks:

* We check that the lengths of the vertical and horizontal lines are approximately the same and that the total length of the lines is almost the same as the LCD screen size (determined experimentally)
* We check that the diagonals are almost equal (we need a rectangle, the LCD screen)
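The intersection and the rectangle checks could look roughly like the sketch below. It reflects one reading of the checks above: opposite sides should be about equal, the perimeter should be close to the expected one, and the diagonals should match. The function names, the corner order and the 10% tolerance are assumptions made for the example.

```python
import math


def intersection(line_a, line_b):
    """Intersection of two infinite lines, each given by two points.

    Returns None when the lines are parallel.
    """
    (x1, y1), (x2, y2) = line_a
    (x3, y3), (x4, y4) = line_b
    denom = (x1 - x2) * (y3 - y4) - (y1 - y2) * (x3 - x4)
    if denom == 0:
        return None
    det_a = x1 * y2 - y1 * x2
    det_b = x3 * y4 - y3 * x4
    x = (det_a * (x3 - x4) - (x1 - x2) * det_b) / denom
    y = (det_a * (y3 - y4) - (y1 - y2) * det_b) / denom
    return (x, y)


def looks_like_lcd(corners, expected_width, expected_height, tolerance=0.1):
    """Check four corners (top-left, top-right, bottom-right, bottom-left)."""
    tl, tr, br, bl = corners
    top, bottom = math.dist(tl, tr), math.dist(bl, br)
    left, right = math.dist(tl, bl), math.dist(tr, br)
    diag_1, diag_2 = math.dist(tl, br), math.dist(tr, bl)

    def close(a, b):
        # Relative comparison: the values differ by at most `tolerance`.
        return abs(a - b) <= tolerance * max(a, b)

    expected_perimeter = 2 * (expected_width + expected_height)
    return (close(top, bottom)
            and close(left, right)
            and close(top + bottom + left + right, expected_perimeter)
            and close(diag_1, diag_2))
```

Comparing the diagonals is what rules out a skewed parallelogram, whose opposite sides are still equal.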

Then we determine the rotation angle of the token, rotate the image by this angle and crop the LCD data:
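One simple way to get such an angle, shown here only as a sketch, is to measure the tilt of the top edge of the detected rectangle. The function name and the choice of the top edge are assumptions. In OpenCV the rotation itself would typically be applied with `cv2.getRotationMatrix2D` and `cv2.warpAffine`; whether the author uses exactly these calls is not stated in the article.

```python
import math


def skew_angle_degrees(top_left, top_right):
    """Angle of the top edge relative to the horizontal axis, in degrees.

    Image coordinates are assumed (y grows downward), so a positive value
    means the right end of the edge sits lower than the left end.
    """
    dx = top_right[0] - top_left[0]
    dy = top_right[1] - top_left[1]
    return math.degrees(math.atan2(dy, dx))


# Usage: a perfectly level edge gives zero tilt.
level = skew_angle_degrees((0, 0), (10, 0))
```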

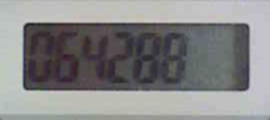

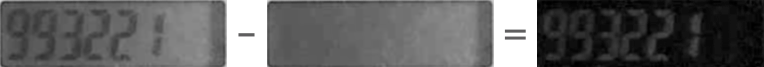

The hardest part is over. Now we have to increase the contrast of the resulting image. We store an image of the blank LCD screen in memory (before pressing the token button) and then simply “subtract” this image from the image with digits (after pressing the button):
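The subtraction can be sketched on plain grayscale rows as below. Taking the absolute difference per pixel is an assumption (the article only says “subtract”), and the function name is made up for the example.

```python
def subtract_background(frame, blank):
    """Per-pixel absolute difference between a frame and the blank screen.

    Both images are lists of rows of grayscale values with the same size.
    Pixels that did not change come out as 0; lit segments stand out.
    """
    return [
        [abs(pixel - background) for pixel, background in zip(frame_row, blank_row)]
        for frame_row, blank_row in zip(frame, blank)
    ]


# Usage: only the two pixels that changed remain non-zero.
blank = [[200, 200], [200, 200]]
frame = [[200, 50], [60, 200]]
difference = subtract_background(frame, blank)
```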

So now we have a grayscale difference image. We use another straightforward algorithm to find the optimal threshold that splits the image pixels into “black” and “white”, convert the image to pure black and white, and extract the symbols:
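The article does not say which threshold search is used. As a stand-in, the sketch below uses Otsu's method, a common technique that picks the threshold maximizing the variance between the two pixel classes. It is a different, swapped-in algorithm rather than the author's, and the function names are hypothetical.

```python
def otsu_threshold(pixels):
    """Return the level (0..255) that best separates dark and bright pixels.

    `pixels` is a flat list of integer grayscale values. Pixels <= the
    returned level form the dark class.
    """
    histogram = [0] * 256
    for value in pixels:
        histogram[value] += 1

    total = len(pixels)
    total_sum = sum(level * count for level, count in enumerate(histogram))

    best_threshold, best_variance = 0, -1.0
    count_low, sum_low = 0, 0
    for level in range(256):
        count_low += histogram[level]
        sum_low += level * histogram[level]
        count_high = total - count_low
        if count_low == 0:
            continue  # no dark pixels yet
        if count_high == 0:
            break  # every pixel is already in the dark class
        mean_low = sum_low / count_low
        mean_high = (total_sum - sum_low) / count_high
        # Between-class variance, up to a constant factor.
        variance = count_low * count_high * (mean_low - mean_high) ** 2
        if variance > best_variance:
            best_threshold, best_variance = level, variance
    return best_threshold


def binarize(image, threshold):
    """Map pixels above the threshold to 1 and all others to 0."""
    return [[1 if pixel > threshold else 0 for pixel in row] for row in image]
```

After background subtraction the lit segments are the bright pixels, so they end up as 1 in the binarized image.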

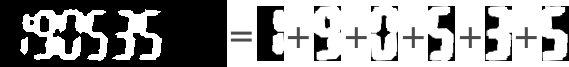

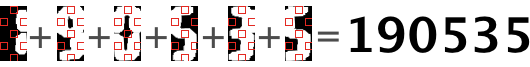

After that we simply recognize the digits. There is no need to bother with neural networks and the like, since we have a 7-segment display: there are 7 “points” that uniquely identify each digit:
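The idea of probing seven points can be sketched like this. The segment letters follow the usual a to g convention for 7-segment displays; the probe positions (given in percent of the cell size) and all names are assumptions for the example and would need tuning for a real display.

```python
# Probe position of each segment as (x percent, y percent) of the digit cell.
# These positions are illustrative guesses, not values from the article.
SEGMENT_POINTS = {
    "a": (50, 10),  # top
    "b": (85, 30),  # top right
    "c": (85, 70),  # bottom right
    "d": (50, 90),  # bottom
    "e": (15, 70),  # bottom left
    "f": (15, 30),  # top left
    "g": (50, 50),  # middle
}

# Standard 7-segment patterns: which segments are lit for each digit.
DIGITS = {
    frozenset("abcdef"): 0, frozenset("bc"): 1, frozenset("abdeg"): 2,
    frozenset("abcdg"): 3, frozenset("bcfg"): 4, frozenset("acdfg"): 5,
    frozenset("acdefg"): 6, frozenset("abc"): 7, frozenset("abcdefg"): 8,
    frozenset("abcdfg"): 9,
}


def read_digit(cell):
    """Decode one digit from a binary cell (rows of 0/1); None if unknown."""
    height, width = len(cell), len(cell[0])
    lit = frozenset(
        name
        for name, (x_pct, y_pct) in SEGMENT_POINTS.items()
        if cell[y_pct * (height - 1) // 100][x_pct * (width - 1) // 100]
    )
    return DIGITS.get(lit)


# Usage: a hand-drawn 7x11 cell showing the digit 2.
EXAMPLE_TWO = [[1 if ch == "#" else 0 for ch in row] for row in [
    ".#####.", ".#####.", ".....##", ".....##", ".....##", ".#####.",
    "##.....", "##.....", "##.....", ".#####.", ".#####.",
]]
digit = read_digit(EXAMPLE_TWO)
```

Returning `None` for an unknown pattern lets the caller reject a bad frame instead of guessing.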

Just to be safe, we recognize the digits in several frames: if the result is the same for 3 frames in a row, recognition is considered successful, and we show the result to the user via the “growlnotify” program and copy the code to the clipboard.
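The “3 frames in a row” rule can be sketched as a small helper that scans a stream of per-frame readings. The function name is hypothetical, and treating a failed frame (`None`) as a reset of the streak is an assumption.

```python
def first_stable_reading(readings, required=3):
    """Return the first value seen `required` times in a row, else None.

    `readings` is an iterable of per-frame results; None marks a frame
    where recognition failed and resets the streak.
    """
    previous, streak = None, 0
    for reading in readings:
        if reading is not None and reading == previous:
            streak += 1
        else:
            previous = reading
            streak = 1 if reading is not None else 0
        if streak >= required:
            return reading
    return None


# Usage: one misread frame in the middle restarts the count.
frames = ["123456", "123456", "128456", "123456", "123456", "123456"]
code = first_stable_reading(frames)
```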

Video: how the device works

Warning: the video has sound!